If you’ve ever tried to swat a fly, you know that insects react to movement extremely fast. A biologically inspired compound eye is helping scientists understand how insects sense objects and their trajectories with such speed – research that could lead to camera-based 3D location systems for robots, self-driving cars, and unmanned aerial vehicles (UAVs).

Researchers from Tianjin University in China say their device works like an insect’s compound eye, in which hundreds to thousands of repeating units, known as ommatidia, each act as a separate visual receptor.

“[Insects] might detect the trajectory of an object based on the light intensity coming from that object rather than using precise images like human vision,” says Le Song, a researcher at Tianjin University’s School of Precision Instrument and Opto-electronics Engineering. “This motion-detection method requires less information, allowing the insect to quickly react to a threat.”

The researchers used single-point diamond turning to create 169 microlenses on the surface of the compound eye, cutting them on a Moore Nanotechnology Systems 250 CNC lathe equipped with a 20µm circular arc diamond cutting tool and Moore’s Nanocam3D software.

Each microlens had a 1mm radius, producing a component 20mm in diameter that could detect objects within a 90° field of view (FOV). As with the ommatidia of most insects, the FOVs of adjacent microlenses overlapped.
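Neighboring fields of view overlap when each lens sees a wider angle than the spacing between neighboring optical axes. The article gives neither the lens layout nor each lens's acceptance angle, so this Python sketch rests on stated assumptions: 169 lenses could form a 13 × 13 square grid or a hexagonal array of seven rings around a center lens, and the axes are taken to be evenly spaced with the outermost ones pointing at the edges of the 90° FOV. The acceptance angles used are hypothetical.

```python
import math

FOV_DEG = 90.0  # field of view reported for the device


def lenses_in_hex_array(rings: int) -> int:
    """Lens count for a hexagonal array: one center lens plus `rings` rings."""
    return 3 * rings * (rings + 1) + 1


def axis_pitch_deg(lenses_across: int, fov_deg: float = FOV_DEG) -> float:
    """Angle between neighboring optical axes, assuming even spacing and
    outermost axes pointing at the edges of the field of view."""
    return fov_deg / (lenses_across - 1)


def fovs_overlap(acceptance_deg: float, pitch_deg: float) -> bool:
    """Neighboring viewing cones overlap when each lens's full angular
    width exceeds the spacing between neighboring axes."""
    return acceptance_deg > pitch_deg


# Two hypothetical layouts that both contain 169 lenses.
square_pitch = axis_pitch_deg(13)         # 13 x 13 square grid
hex_pitch = axis_pitch_deg(2 * 7 + 1)     # 7 hex rings -> 15 lenses across
print(f"square: {square_pitch:.2f} deg, hex: {hex_pitch:.2f} deg")
# prints "square: 7.50 deg, hex: 6.43 deg"
```

Under either assumed layout, a lens whose full acceptance angle is, say, 10° would overlap its neighbors, while one of 5° would leave gaps.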

Moore Nanotechnology Systems Vice President of Engineering Paul Vermette says complex optical challenges are driving demand for precision machining methods.

“The precision and controls required to develop and manufacture advanced optical systems used in today’s UAVs, self-driving cars, and next-generation sensor and camera modules have guided Nanotech’s technology roadmap,” Vermette adds. “Ultra-precision, single-point diamond turned surface finishes, with sub-micron form error, require tool manufacturers to build ultra-precise machines with sub-nanometer positional accuracies.”

To test the artificial compound eye’s ability to sense 3D trajectories, the researchers added a grid to each eyelet. They then placed LED light sources at known distances and directions from the compound eye and used an algorithm to calculate the 3D location of each LED based on the location and intensity of its light. The system determined the LEDs’ 3D locations rapidly, although accuracy declined as distance increased.
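The article does not describe the team’s localization algorithm. As a hedged illustration of how brightness alone can yield a 3D position, this Python sketch swaps in a simple intensity-weighted centroid method under toy assumptions: a hypothetical 13 × 13 eyelet grid spread over the 90° FOV, a Gaussian angular response for each eyelet (`SIGMA_DEG`), inverse-square fall-off with distance, and an LED of known brightness (`SOURCE_POWER`). All names and values are illustrative and are not taken from the Tianjin work.

```python
import numpy as np

# Hypothetical eyelet layout: 13 x 13 = 169 eyelets whose optical axes are
# spread evenly in tilt angle across a 90-degree field of view.
N_SIDE = 13
FOV_DEG = 90.0
SIGMA_DEG = 7.5       # assumed angular response width of one eyelet
SOURCE_POWER = 1.0    # assumed known LED brightness (arbitrary units)

_tilts = np.linspace(-FOV_DEG / 2, FOV_DEG / 2, N_SIDE)
_tx_grid, _ty_grid = np.meshgrid(_tilts, _tilts)
AXIS_TX = _tx_grid.ravel()   # horizontal tilt of each eyelet axis, degrees
AXIS_TY = _ty_grid.ravel()   # vertical tilt of each eyelet axis, degrees


def angular_response(tx, ty):
    """Gaussian response of every eyelet to light arriving from tilt (tx, ty).

    Treats the two tilt angles as flat coordinates (a small-angle simplification).
    """
    d2 = (AXIS_TX - tx) ** 2 + (AXIS_TY - ty) ** 2
    return np.exp(-d2 / (2 * SIGMA_DEG ** 2))


def simulate_intensities(pos):
    """Toy forward model: inverse-square fall-off times angular response.

    `pos` is (x, y, z) in front of the eye, with z > 0 along its central axis.
    """
    x, y, z = pos
    tx = np.degrees(np.arctan2(x, z))
    ty = np.degrees(np.arctan2(y, z))
    dist = np.linalg.norm(pos)
    return SOURCE_POWER / dist ** 2 * angular_response(tx, ty)


def locate(intensities):
    """Estimate a point source's 3D position from eyelet intensities alone."""
    total = intensities.sum()
    # Direction: intensity-weighted centroid of the eyelet axis tilts.
    tx = np.dot(intensities, AXIS_TX) / total
    ty = np.dot(intensities, AXIS_TY) / total
    # Distance: inverse-square law, comparing measured total brightness with
    # what the same LED would give at unit distance in that direction.
    expected_at_unit = SOURCE_POWER * angular_response(tx, ty).sum()
    dist = np.sqrt(expected_at_unit / total)
    # Convert (tilt, tilt, distance) back to Cartesian coordinates.
    v = np.array([np.tan(np.radians(tx)), np.tan(np.radians(ty)), 1.0])
    return dist * v / np.linalg.norm(v)


# Usage: a hypothetical LED 0.4 units in front of the eye, slightly off-axis.
led = np.array([0.05, -0.02, 0.40])
print(locate(simulate_intensities(led)))   # values very close to led
```

Direction comes from which eyelets are brightest and distance from how bright they are overall, so no image is ever formed. Because this toy model is noiseless, it recovers the position almost exactly; with real sensor noise, the 1/d² drop in received light is one plausible reason estimates would degrade at longer range.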

“The compound eye could identify an object’s location based on its brightness instead of a complex image process,” Song explains, adding that similar lightweight sensing systems that need little computing power could have many commercial applications.

Moore Nanotechnology Systems http://www.nanotechsys.com

The Optical Society https://www.osa.org

Tianjin University http://www.tju.edu.cn

Latest from EV Design & Manufacturing

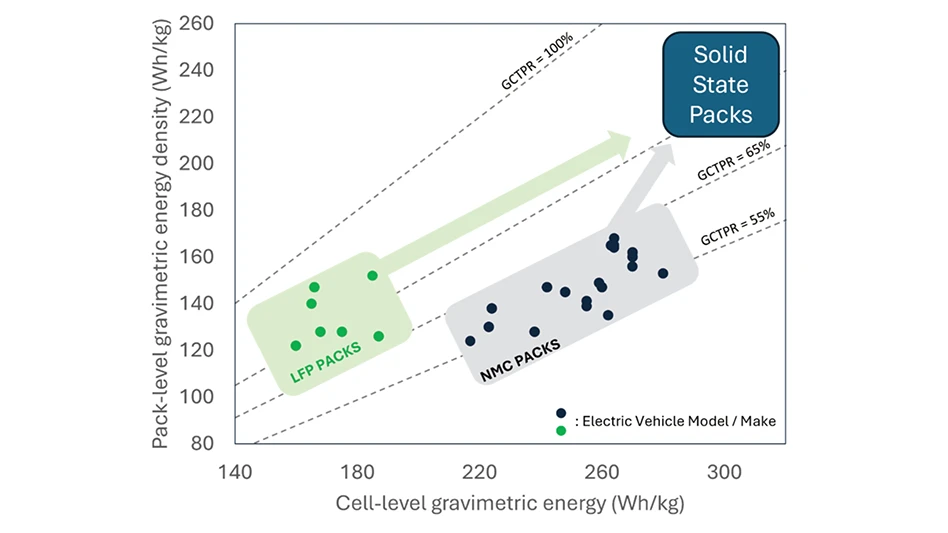

- Riding the current of the solid-state battery movement

- New version of online simulation software dedicated to supercapacitors

- Cutting Edge Innovations: Maximizing Productivity and Best Practices with Superabrasives

- Research yields encouraging data on lithium-ion battery recycling

- Waters Corporation introduces mechanical testing instrument for polymers and composites

- Practical and Affordable Factory Digital Twins for SMEs

- Honda offers a look at flexible electric vehicle manufacturing approach

- Electric vehicle charging solution designed for multifamily communities, homeowners’ associations